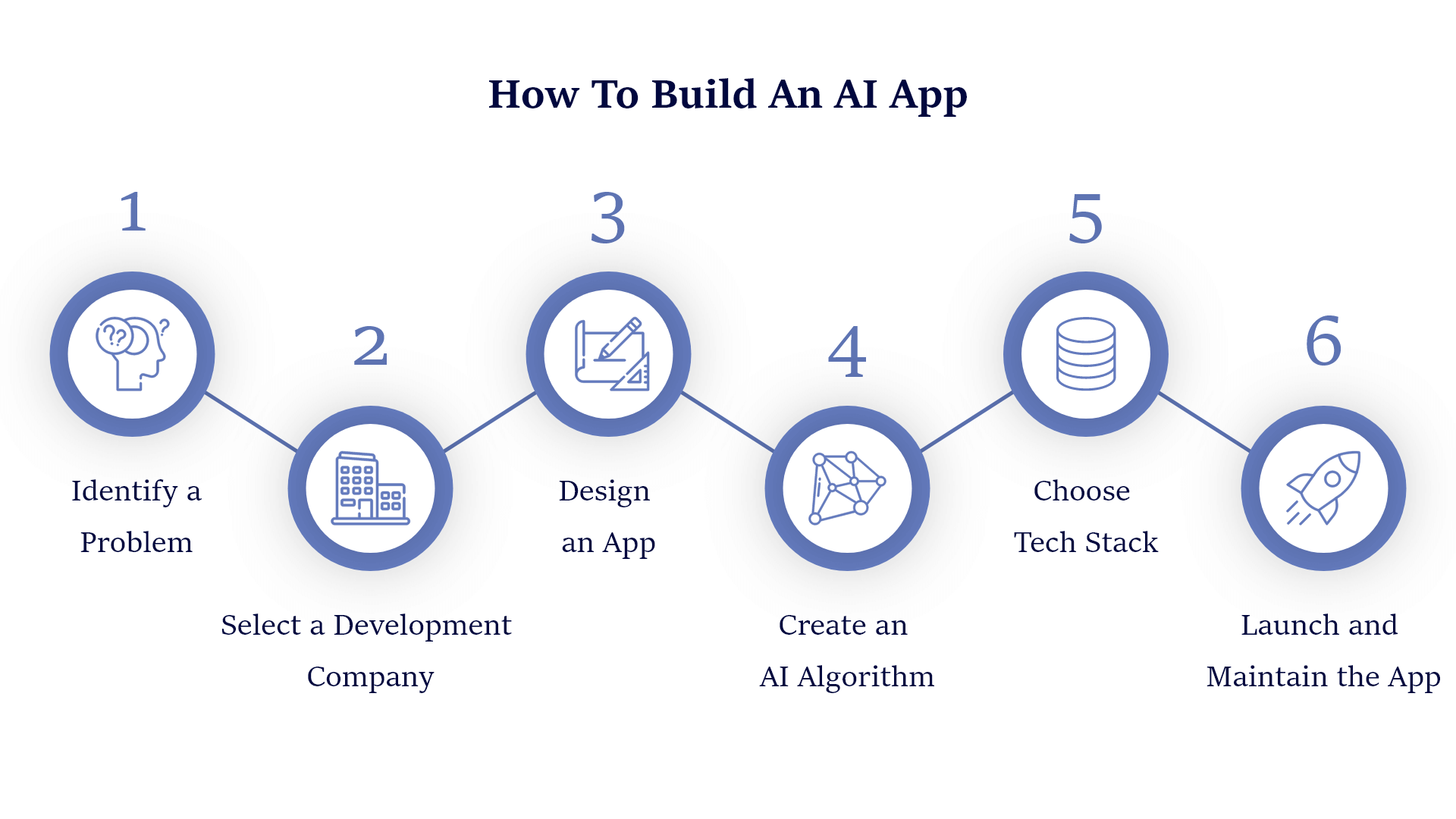

To build an AI, you can follow these steps:

- Define the problem: Identify the task you want the AI to perform. For image recognition, for example, the task could be to identify the object shown in an image.

-

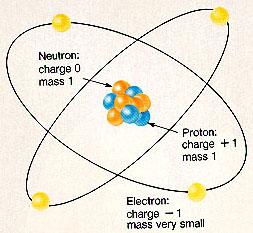

Collect and preprocess the data: Gather a dataset relevant to the problem. For image recognition, you might need a dataset of images with their corresponding labels. Preprocess the data by cleaning it, normalizing it, and handling missing values.

- Choose a model: Select a suitable machine learning model for the problem. For image recognition, you might choose a convolutional neural network (CNN).

- Train the model: Split the dataset into a training set and a testing set. Train the model on the training set using an optimization algorithm like stochastic gradient descent (SGD) or Adam.

- Evaluate the model: Test the model’s performance on the testing set. Evaluate the model using appropriate metrics like accuracy, precision, recall, and F1-score.

- Improve the model: If the model’s performance is not satisfactory, you can try to improve it by tuning hyperparameters, using a different model, or collecting more data.
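The train and evaluate steps above can be illustrated without any machine learning library. The following is a minimal sketch in plain Python, assuming a binary classification task. The helper names `train_test_split` and `binary_metrics` are hypothetical and written for this illustration only; they are not taken from a specific library.

```python
import random


def train_test_split(samples, labels, test_fraction=0.2, seed=0):
    """Shuffle the data once, then hold out `test_fraction` of it for testing."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    n_test = int(len(indices) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return (
        [samples[i] for i in train_idx],
        [labels[i] for i in train_idx],
        [samples[i] for i in test_idx],
        [labels[i] for i in test_idx],
    )


def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Example: made-up labels and predictions for eight test samples
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(binary_metrics(y_true, y_pred))
```

In this made-up example there are three true positives, one false positive and one false negative, so all four metrics come out to 0.75. On real data the metrics usually differ from each other, which is why it helps to look at more than accuracy alone.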

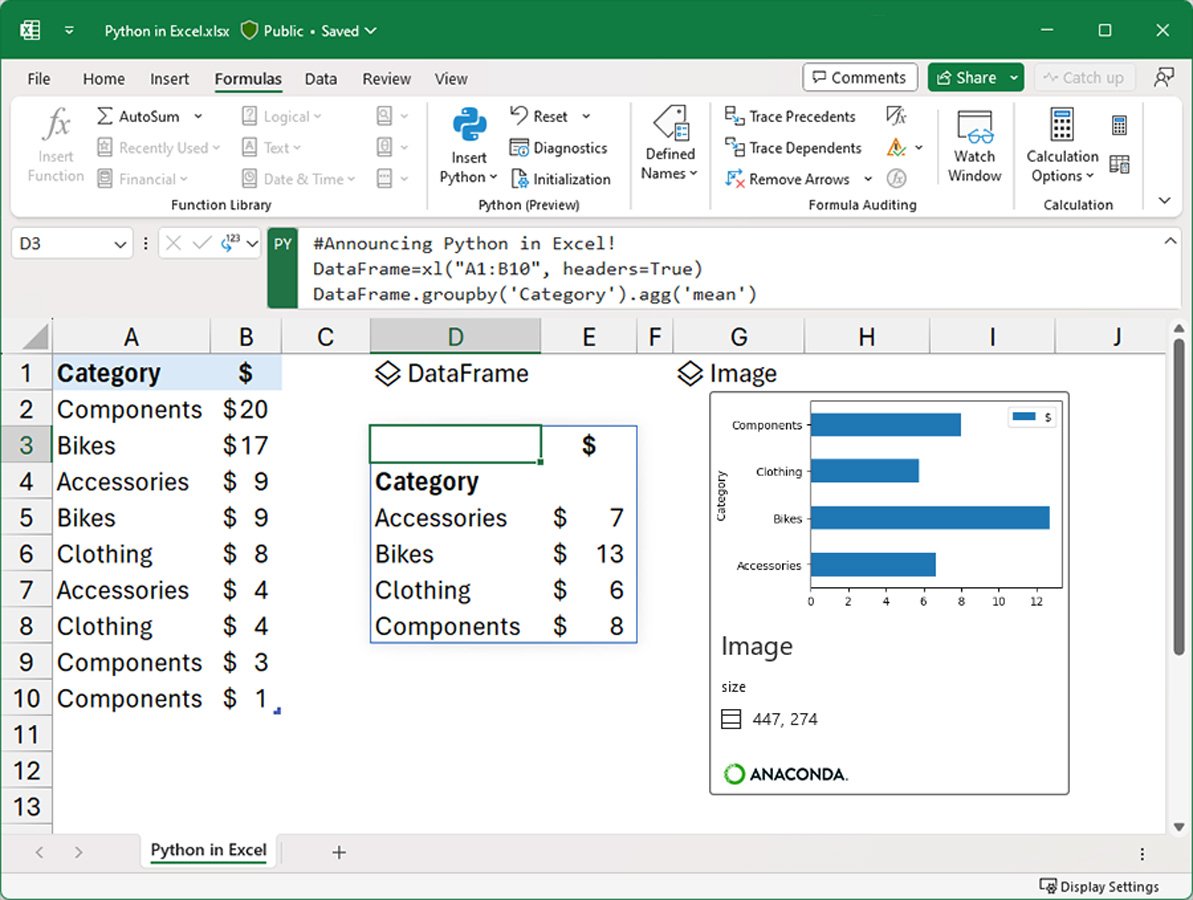

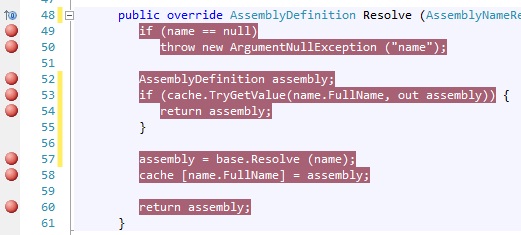

Here’s an example of how to build an AI for image recognition using a CNN in Python:

```python
import tensorflow as tf
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Conv2D, Flatten, MaxPooling2D

# Load the MNIST dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Preprocess the data: add a channel axis and scale pixel values to [0, 1]
x_train = x_train.reshape(-1, 28, 28, 1)
x_test = x_test.reshape(-1, 28, 28, 1)
x_train, x_test = x_train / 255.0, x_test / 255.0

# One-hot encode the labels (10 digit classes)
y_train = tf.keras.utils.to_categorical(y_train, 10)
y_test = tf.keras.utils.to_categorical(y_test, 10)

# Define the model
model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    MaxPooling2D((2, 2)),
    Conv2D(64, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model, holding out 10% of the training data for validation
# so that the test set stays unseen until the final evaluation
model.fit(x_train, y_train, epochs=5, validation_split=0.1)

# Evaluate the model on the test set
loss, accuracy = model.evaluate(x_test, y_test)
print(f'Test accuracy: {accuracy:.4f}')
```

This code uses the MNIST dataset of handwritten digits, which contains 60,000 training images and 10,000 test images, each a 28×28 grayscale picture of a digit from 0 to 9. It trains a CNN on the training set and evaluates it on the test set, using accuracy as the metric.
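To see how the layers in the model above fit together, it can help to trace the shape of the data through the network by hand. The sketch below does that arithmetic in plain Python. It assumes the Keras defaults for these layers (no padding and stride 1 for `Conv2D`, a pooling stride equal to the pool size for `MaxPooling2D`), and the helper names `conv2d_out`, `pool_out` and `trace_cnn` are hypothetical, introduced only for this illustration.

```python
def conv2d_out(size, kernel, stride=1):
    """Output width/height of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def pool_out(size, pool):
    """Output width/height of max pooling whose stride equals the pool size."""
    return size // pool


def trace_cnn(input_size=28, input_channels=1):
    """Return (layer name, output shape, parameter count) rows and the total parameters."""
    size, channels = input_size, input_channels
    rows, total = [], 0
    for filters in (32, 64):
        # Each filter has 3*3*channels weights plus one bias
        params = (3 * 3 * channels + 1) * filters
        size, channels = conv2d_out(size, 3), filters
        total += params
        rows.append((f"Conv2D({filters})", (size, size, channels), params))
        size = pool_out(size, 2)
        rows.append(("MaxPooling2D", (size, size, channels), 0))
    features = size * size * channels
    rows.append(("Flatten", (features,), 0))
    for units in (128, 10):
        # Each unit has one weight per input feature plus one bias
        params = (features + 1) * units
        total += params
        rows.append((f"Dense({units})", (units,), params))
        features = units
    return rows, total


rows, total = trace_cnn()
for name, shape, params in rows:
    print(f"{name:<14} {str(shape):<14} {params}")
print("Total parameters:", total)
```

Under these assumptions, the 28×28 input shrinks to 26×26 after the first convolution, 13×13 after pooling, 11×11 after the second convolution and 5×5 after the second pooling, so `Flatten` produces 5 × 5 × 64 = 1,600 features. Most of the 225,034 trainable parameters sit in the first `Dense` layer. You can compare these numbers with the output of `model.summary()`.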
