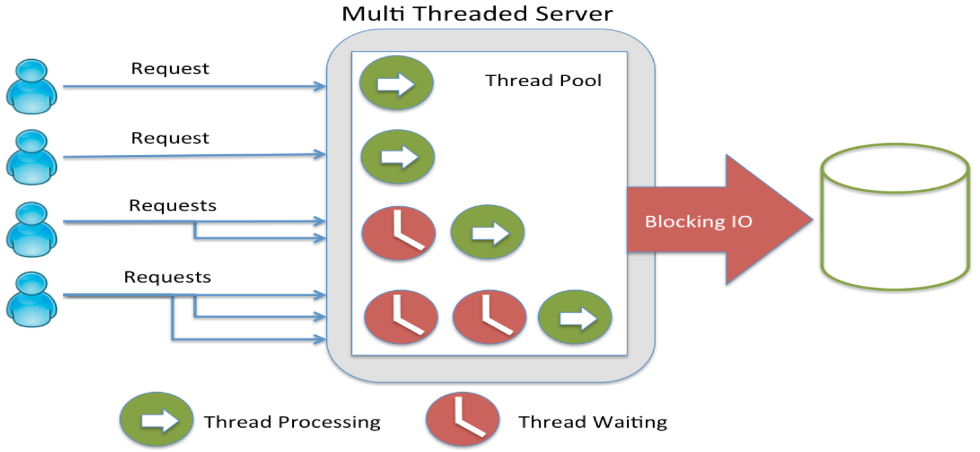

To create a thread pool in Python that can handle multiple requests to the same URL, you can use the following steps:

1. Import the `ThreadPoolExecutor` class from the `concurrent.futures` module.

2. Create a function that will fetch the URL and return the response.

3. Create a list of URLs that you want to fetch.

4. Create a `ThreadPoolExecutor` with a maximum number of workers that is equal to the number of URLs you want to fetch.

5. Use the `map` method of the `ThreadPoolExecutor` to call the `fetch_url` function for each URL in the list.

6. Iterate over the results and print them out.

Below are the basic instructions on how to execute and build the requested code as required:

import requests

from concurrent.futures import ThreadPoolExecutor

def fetch_url(url):

response = requests.get(url)

return response.text

urls = [‘https://www.google.com’, ‘https://www.yahoo.com’, ‘https://www.facebook.com’]

with ThreadPoolExecutor(max_workers=3) as executor:

results = executor.map(fetch_url, urls)

for result in results:

print(result)

This code will create a thread pool with three workers and will fetch the three URLs in parallel. The results will be printed out in the order that they are received.

import requests

from concurrent.futures import ThreadPoolExecutor

def fetch_url(url):

response = requests.get(url)

return response.text

urls = [‘https://www.google.com’, ‘https://www.yahoo.com’, ‘https://www.facebook.com’]

with ThreadPoolExecutor(max_workers=3) as executor:

results = executor.map(fetch_url, urls)

for result in results:

print(result)

About Author

Discover more from SURFCLOUD TECHNOLOGY

Subscribe to get the latest posts sent to your email.